:: Author: Alvi Aladin ::

AI companions provide emotional companionship but come with a host of cybersecurity dangers: data misuse, privacy intrusion, and emotional manipulation. This article identifies these risks and calls for stronger regulation, ethical guidelines, and user awareness as protections against exploitation.

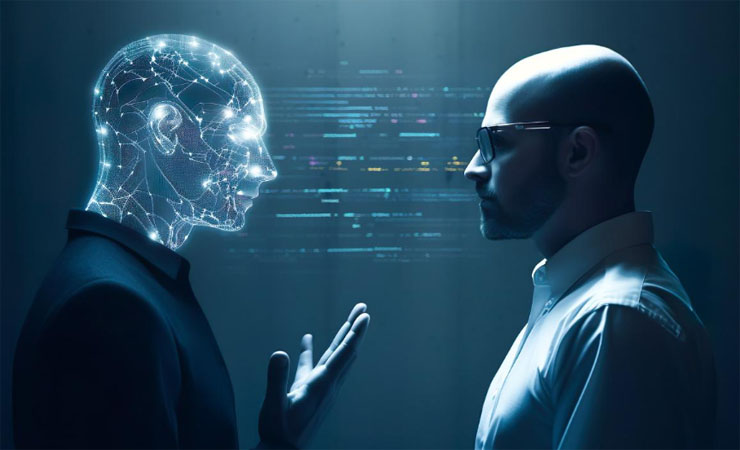

AI companions, popularly known as Artificial Intelligence Partners, have grown in popularity across all age groups because they offer companionship, comfort, and interaction. Designed to interact with users and “connect” with them emotionally, these virtual companions build an almost human relationship by observing a user’s actions, feelings, and preferences. Beneath this seemingly harmless interaction lies a serious cybersecurity threat. AI partners can stir strong emotions in people, but they may also misuse personal information without the user’s knowledge. Because we still don’t fully understand how these technologies operate and affect us, many users are exposed to risks such as data leakage, emotional manipulation, or even dependence when interacting with an AI partner.

AI companions are designed to interact in emotionally sensitive ways that can lead users to form strong, sometimes even intimate, attachments. Companies have taken advantage of this by developing AI that learns from and adapts to each user’s preferences. A 2023 report by the Mozilla Foundation found that most of the AI chat applications it tested failed basic privacy checks, with user data often shared widely across platforms without clear consent. In the absence of specific regulation, developers have broad latitude to decide how that data is used, which opens further avenues for exploitation.

For instance, several chatbot-based companionship services gather information about users’ daily lives and preferences. Because data-usage policies are rarely transparent, users who confide details of their personal or emotional lives to an AI may not realize that this information could feed highly targeted marketing built on very sensitive personal data. Such exploitation poses not only a privacy risk but also the risk of emotionally manipulative exchanges designed to keep users engaged and, therefore, more valuable to companies.

Another critical example is the growing use of deepfake technology alongside AI companions. Information that users share could be fed to advanced machine learning models to create synthetic media impersonating the users or their loved ones. Such misuse enables blackmail, identity theft, and other forms of social engineering attacks. One recent report, for example, highlighted that deepfake scams jumped 300% in the last year alone, many of them involving people who had unknowingly shared personal information with AI-driven platforms. This adds a layer of vulnerability that most users would not anticipate.

Most AI-based platforms, especially those offering companionship services, are notorious for complex or ambiguous privacy policies. These policies typically bind users to vague terms that permit data collection with few constraints, leaving user data increasingly exposed. According to Kaspersky’s 2023 cybersecurity report, AI-driven systems by their very nature tend to hold extensive data about user preferences and behaviors. This information could be extremely valuable to cybercriminals seeking to craft tailored phishing attacks.

Hidden Dangers of AI Companions: Emotional Manipulation & Synthetic Bonds (Photo: collected)

The data held by these AI systems is also vulnerable to direct breaches. In 2022 alone, researchers uncovered flaws in several AI applications that could have allowed unauthorized parties to access personal information stored on those systems. Such intrusions are especially troubling for AI companionship apps, because the data they hold can be exceptionally sensitive. Companies therefore need additional safeguards to protect data collected through AI interactions from unauthorized access.

Regulation of AI is still in its infancy, leaving companies free to engage users however they see fit, whether ethically or not. As AI technologies grow rapidly, policymakers are working to set boundaries on how AI systems collect personal information. According to a recent report by Luvr AI, AI for social companionship is being developed with little ethical oversight. Some designers regard this emotional involvement as innovative and user-centric; others see it as an intrusion into users’ lives and a means of exploiting their vulnerabilities.

In an age when data can be worth more than currency, AI companies are expected to take responsibility for upholding ethical standards. When developing AI companions, makers can reduce the risk of manipulation by building in transparency, strengthening privacy controls, and safeguarding users’ emotional well-being. Users, meanwhile, should educate themselves about the risks of sharing personal information in digital spaces and recognize that vulnerabilities exposed to the wrong hands can be turned against them.